Functional Rationals in Scala

This is a small tutorial on building a simple Scala library for rational numbers (any number that can be written as a / b, where a and b are integers and b is non-zero). The target audience is Java programmers who are curious about what Scala has to offer. Elements covered include operator overloading, the structure of pattern matchers, using lazy values to refer to exceptional situations that would otherwise throw, and an example companion object.

I have been following the online course Functional Programming in Scala, offered by EPFL, and decided to extend one of its examples, a tail-recursive gcd computation, into my own library of rational numbers. Let’s see how we could do this. As a reminder, a rational number is any number that can be written in the form a/b, where a and b are integers and b is non-zero. The goal of this exercise is to (a) play around with Scala, showcasing that even the most basic features of the language can provide great benefits for the readability and extensibility of code, and (b) investigate whether our new library can offer time and accuracy improvements during computations that involve many rational numbers, compared to the default alternative: generating the Double value that approximates a / b as well as it can within 64 bits, and working with those approximations. The entirety of the code is available under GPL here (src/main/scala/week2/rational). Let’s begin.

Initialization & Basic Methods

In Scala, we are allowed to execute statements inside a class’ body. Those statements make up the primary constructor of the class. For our Rational type, we will do a neat trick: in order to make the various computations faster, we will make sure we reduce the fraction as much as possible during construction. For example, if the user asks us to create the fraction 10/15, our implementation will reduce that to 2/3, as follows:

import scala.annotation.tailrec

class Rational(x: BigInt, y: BigInt) {
  import Rational._ // constants from the companion object (ONE, ZERO, ...)

  require(y != 0, "Cannot create a Rational with denominator 0")

  // Simplify representation by dividing both numer and denom by their gcd.
  @tailrec
  private def gcd(a: BigInt, b: BigInt): BigInt = if (b == 0) a else gcd(b, a % b)

  // Named differently from the method: a def and a val cannot share a name.
  private val divisor: BigInt = gcd(x, y)
  private val numer = x / divisor // vals are immutable
  private val denom = y / divisor

require will throw an IllegalArgumentException if the caller requests a rational with a denom of 0. The tail-recursive method gcd(a, b) will calculate the greatest common divisor of x and y, and then the constructor will consequently divide x and y and store the resulting values in numer and denom respectively. We use BigInt instead of Int for our inner representation so that we can deal with fractions of really large integers.
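As a rough, self-contained sketch of the construction-time reduction described above: the class name Frac and its public numer and denom fields are assumptions made purely for illustration, and this is a stripped-down stand-in rather than the article's Rational.

```scala
import scala.annotation.tailrec

// Hypothetical, minimal stand-in for Rational: it only reduces and prints.
final class Frac(x: BigInt, y: BigInt) {
  require(y != 0, "denominator must be non-zero")

  // Euclid's algorithm; the recursive call is in tail position.
  @tailrec
  private def gcd(a: BigInt, b: BigInt): BigInt =
    if (b == 0) a else gcd(b, a % b)

  private val g: BigInt = gcd(x, y)
  val numer: BigInt = x / g // reduced numerator
  val denom: BigInt = y / g // reduced denominator

  override def toString: String = s"$numer/$denom"
}

object FracDemo {
  def main(args: Array[String]): Unit = {
    println(new Frac(10, 15)) // reduced at construction time
    println(new Frac(7, 1))   // an integer expressed as a fraction
  }
}
```

Because the reduction happens in the constructor, every later operation starts from operands that are already in lowest terms.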

Of course, we can define other constructors if we would like, as in Java. For example, since all integers can be expressed as rationals (just give a denom of 1), we can do this:

def this(x:BigInt) = this(x, 1)

Furthermore, and this will be important for our experiments, we want to force the program to output the “pure” representation of our Rational instances instead of dividing them all the time, so we override toString as follows:

override def toString : String = numer + "/" + denom

As a result, printing new Rational(20, 100) shows 1/5 rather than the decimal 0.2. Feel free to browse the code for the overrides of equals and hashCode.

Operator Overloading

Among the most discussed drawbacks of Java is its lack of operator overloading, something that C++ programmers in particular may find limiting. In Scala, operators are ordinary methods: a method name can be an alphanumeric identifier (which may not begin with a digit) or a sequence of operator characters such as +, * or <=. For our rational number API, it would be very convenient to transparently overload the classic binary arithmetic operators: +, -, *, /, etc. In our example, we overload all of those, as well as unary -, the caret operator (^) to denote exponentiation, and the binary comparison operators (>, <, <=, etc). Here are some examples.

def + (that: Rational): Rational = {
  require(that != null, "Rational + Rational: Provided null argument.")
  new Rational(this.numer * that.denom + that.numer * this.denom,
               this.denom * that.denom)
}

def + (that: BigInt): Rational = this + new Rational(that, 1)

def * (that: Rational): Rational = {
  require(that != null, "Rational * Rational: Provided null argument")
  new Rational(this.numer * that.numer, this.denom * that.denom)
}

def * (that: BigInt) = new Rational(numer * that, denom)

We use BigInt instances for the numerator and denominator representation since the stress tests we show later on can produce very large integers. In the example above, given two Rational instances a and b we define what the behavior of + should be in the expression a + b. We also define the behavior of a + i, where i is a BigInt instance. To re-use the existing implementation for a + b, a + i is translated to a + i/1, which is already defined.

For unary operators, Scala requires that we prefix the operator with unary_ in the method name, to disambiguate them from binary operators:

def unary_- : Rational = new Rational (-numer, denom)

Binary comparison operators:

def > (that: Rational): Boolean = numer * that.denom > that.numer * denom

def >= (that: Rational): Boolean = (this > that) || (this == that)

def > (that: BigInt): Boolean = this > new Rational(that, 1)

def >= (that: BigInt): Boolean = {
  val r = new Rational(that, 1)
  (this > r) || (this == r) // Scala calls "equals" when "==" is invoked
}

def < (that: Rational): Boolean = !(this >= that)

def <= (that: Rational): Boolean = !(this > that)

def < (that: BigInt): Boolean = {
  !(this >= that)
}

def <= (that: BigInt): Boolean = {
  !(this > that)
}

Note how we re-use existing overloads as much as we can. It’s a huge syntactic relief to be able to implement functionality via universally recognized operators instead of having to implement ugly methods like plus, minus, multiply, divide,… And as we will see later, it makes it possible to alter the semantics of an entire computation chain just by changing one line of code!
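To make the "operators are just methods" point concrete, here is a small sketch. The type Ratio is hypothetical and deliberately skips the gcd reduction; it only illustrates how infix and prefix operator syntax maps onto ordinary method calls.

```scala
// Hypothetical two-field type, used only to show how operator methods desugar.
final case class Ratio(n: BigInt, d: BigInt) {
  def +(that: Ratio): Ratio = Ratio(n * that.d + that.n * d, d * that.d)
  def *(that: Ratio): Ratio = Ratio(n * that.n, d * that.d)
  def unary_- : Ratio = Ratio(-n, d)
}

object OperatorSugar {
  val a: Ratio = Ratio(1, 2)
  val b: Ratio = Ratio(1, 3)

  // Infix form: * binds tighter than +, as in ordinary arithmetic.
  val infix: Ratio = a + b * a

  // The same expression written as plain method calls.
  val explicit: Ratio = a.+(b.*(a))

  // Prefix minus resolves to the method named unary_-.
  val negated: Ratio = -a
}
```

Both spellings compile to the same calls, which is why adding an operator to a type needs no special language machinery beyond choosing the method name.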

Say “no” to bloated pattern-matching operators!

Notice in the code above that we overload the overloaded operators themselves by writing a different method for every type of argument. This seems a bit tedious at first, and one might think: isn’t it more of a “functional” approach to take an argument of type Any and then pattern match against it? Like our overriding of the equals method does:

private def canEqual(x: Any): Boolean = x.isInstanceOf[Rational]

private def sameRational(that: Rational): Boolean =
  (numer == that.numer) && (denom == that.denom) ||
  (numer == -that.numer) && (denom == -that.denom)

override def equals(that: Any): Boolean = {
  that match {
    case that: Rational => that.canEqual(this) && sameRational(that)
    case that: BigInt   => sameRational(new Rational(that, 1))
    case _              => false
  }
}

For example, we could do something like this:

def + (that: Any): Rational = {
  require(that != null, "+(Rational, Any): Provided null argument.")
  that match {
    case that: Rational =>
      new Rational(this.numer * that.denom + that.numer * this.denom,
                   this.denom * that.denom)
    // A typed pattern cannot be written as "that: Int | BigInt",
    // so each type gets its own case.
    case that: Int    => new Rational(this.numer + this.denom * that, this.denom)
    case that: BigInt => new Rational(this.numer + this.denom * that, this.denom)
    case that: Double => ??? /* ... */
    case _ => throw new UnsupportedOperationException("+(Rational, Any): Unsupported operand.")
  }
}

Based on the discussion here, I was able to ascertain that this would be a terrible idea, because it would change a compile-time error to a runtime error. For instance, with our current implementation which only overloads + for BigInt and Rational arguments, the code snippet:

val y = new Rational(10, 11)
y + 2.4

does not compile:

Demonstration of the compile-time error when attempting to use an operator on an unsupported type. The compiler also lists String as an expected type because any value can be concatenated with a String: y + "2.4" would append the string literal to the output of Rational::toString and produce the String "10/112.4".

On the other hand, if we implement + in the way above, the code would be prone to a runtime error, which is of course not preferred. So no matter how Java-esque and tedious it might seem, the multi-method way seems like the better solution.
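The trade-off can be sketched side by side. The Amount type and the method name plusAny below are hypothetical; they stand in for Rational and for the Any-based + shown earlier.

```scala
import scala.util.Try

// Hypothetical wrapper type standing in for Rational.
final case class Amount(value: BigInt) {
  // Overloaded variants: an unsupported argument type is rejected by the compiler.
  def +(that: Amount): Amount = Amount(value + that.value)
  def +(that: BigInt): Amount = Amount(value + that)

  // Any-based variant: every call compiles, and failure is deferred to runtime.
  def plusAny(that: Any): Amount = that match {
    case a: Amount => this + a
    case i: BigInt => this + i
    case i: Int    => this + BigInt(i)
    case other     =>
      throw new UnsupportedOperationException(s"Unsupported operand: $other")
  }
}

object OverloadVsAny {
  val ok: Amount = Amount(1) + Amount(2)

  // Amount(1) + 2.4 would be rejected at compile time, so it is left commented out.
  // val bad = Amount(1) + 2.4

  // The Any-based call type-checks and only fails once it runs.
  val deferred: Try[Amount] = Try(Amount(1).plusAny(2.4))
}
```

The overloaded version costs one extra method per supported type, but the set of supported types is then visible in the signatures and enforced before the program ever runs.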

Lazy evaluation of expressions that throw

The keyword lazy val in Scala does exactly what you think: it defines an expression head = body where body is evaluated only the first time head is accessed, after which the result is cached. This can sometimes lead to interesting behavior. In the companion object to Rational, we can define some constants:

object Rational {
  val ONE = new Rational(1, 1)
  val ZERO = new Rational(0, 1)
  val MINUSONEOVERONE = new Rational(-1, 1)
  val ONEOVERMINUSONE = new Rational(1, -1)
  lazy val ZEROOVERZERO = new Rational(0, 0)
}

Note that the last constant, representing the undefined form 0/0, is a lazy val. Its RHS is a constructor call with both arguments set to zero. However, as a reminder, whenever a denominator of zero is provided to the Rational constructor, we make sure to throw an instance of IllegalArgumentException:

require(y != 0, "Cannot create a Rational with denominator 0")

Since the constant is lazy, its RHS, which leads to the exception throwing, will not be evaluated until after the companion object has been brought to life! This means that the class Rational itself has the capacity to internally define how it wants exceptional instantiations to behave! In this case, by “exceptional” instantiation we are referring to the undefined form 0/0 which has been encoded as a constant in the companion object, but we could imagine all sorts of classes with exceptional instantiations that might need to be documented! Especially for applications which monitor global state, where variables are mutated irrespective of what’s going on in the current thread’s call stack, having the capacity to define special cases of a type can be powerful!

Since all the statements within the body of the companion object have to be evaluated (unless lazy!) before the companion object can come into existence, stripping the lazy keyword from the definition would make initialization of the companion object fail when requesting the constant ZEROOVERZERO, or any other constant for that matter. Feel free to pull the code, take away the keyword lazy from the line that defines ZEROOVERZERO and see that either one of these statements will throw:

object LazyEval extends App {
  import Rational._

  // ONEOVERMINUSONE // This won't work if `ZEROOVERZERO` *ISN'T* lazy
  // ZEROOVERZERO    // Or this
}
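The deferral can also be observed in isolation. The following is a minimal sketch with hypothetical names (Constants, Broken, attempts); it does not use the Rational class at all, only a lazy val whose initializer throws.

```scala
import scala.util.Try

object Constants {
  var attempts: Int = 0 // counts how often the lazy initializer runs

  val One: Int = 1 // strict: evaluated when Constants is initialized

  // Lazy: the throwing initializer runs only when Broken is read.
  lazy val Broken: Int = {
    attempts += 1
    throw new IllegalArgumentException("deferred failure")
  }
}

object LazyDemo {
  def main(args: Array[String]): Unit = {
    println(Constants.One)         // fine: Broken has not been touched
    println(Try(Constants.Broken)) // the exception surfaces only here
    println(Try(Constants.Broken)) // a lazy val whose initializer threw is retried
    println(Constants.attempts)
  }
}
```

One detail worth knowing: a lazy val caches its value only after the initializer completes successfully, so an initializer that throws is re-run on every access.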

It turns out that it is not possible to emulate this behavior in Java, that is, have an expression that is part of a type (so, having to be evaluated at construction time!) be evaluated lazily. Consider the following type:

public class Uninstantiable {
    public static int badField = willThrow();

    public static int willThrow() {
        throw new RuntimeException("willThrow()");
    }
}

Despite the fact that we only define a static field and method, Uninstantiable is indeed uninstantiable:

public class UninstantiableRunner {
    public static void main(String[] args) {
        // Cannot instantiate;
        new Uninstantiable();
    }
}

This main will throw an instance of ExceptionInInitializerError, caused by the RuntimeException raised in willThrow() while the class is being initialized.

Now, care must be taken to ensure that nobody thinks we are making the wrong claim. The claim is not that lazy behavior cannot be implemented in Java. It absolutely can, and it’s a well-known and widely used pattern. Here’s a simple example:

public class JavaLazyVal {
    private long val;

    // Pay once.
    public long getVal() {
        if (val == 1) {
            System.out.println("Performing an expensive computation...");
            // Assumed expensive. Have to ensure that value
            // CAN'T be equal to the initializer value!
            val = (long) Math.pow(3, 10);
        } else {
            System.out.println("No longer performing expensive computation...");
        }
        return val;
    }

    public JavaLazyVal() {
        val = 1;
    }
}

A simple runner program:

public class JavaLazyValRunner {
    public static void main(String[] args) {
        JavaLazyVal jlv = new JavaLazyVal();
        System.out.println("val = " + jlv.getVal());
        System.out.println("val = " + jlv.getVal());
    }
}

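And its output, which follows directly from the code above:

Performing an expensive computation...
val = 59049
No longer performing expensive computation...
val = 59049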

So it is absolutely possible to have lazy behavior in Java, and this is a well-known pattern among Java programmers. The claim is not that this can’t be done. The claim is three-fold. First, in Scala you can do this much, much more concisely:

object LazyEval extends App {
  lazy val x = { // Entire scopes can be rvalues of Scala expressions
    println("Evaluating x")
    scala.math.pow(3, 10)
  }

  println(x); println(x)
}

/* Output:
 *
 * Evaluating x
 * 59049.0
 * 59049.0
 */

Second, the Java code that we necessarily had to introduce is open to one possible source of logical error: that the expensive computation itself returns the initializer value, in which case it would be repeated on every call! In some applications, this can be hard to rule out!

Third, with the mechanism of lazy val, you can store expressions which would otherwise prohibit the construction of a class instance! And that can have power.

Tail-recursive repeated squaring

This isn’t in any way specific to Scala or Functional Programming, just something cool that can be written rather concisely in this language / paradigm. We overload the exponentiation operator (^) to perform repeated squaring with a tail-recursive method:

def ^ (n: Int): Rational = {
  // Inner two-arg function pow/2. Not tailrec:
  // the inner method pow/4 is tailrec.
  def pow(base: BigInt, exp: Int): BigInt = {
    require(exp >= 0 && base >= 0, "We need non-negative integer arguments for this method.")

    // Power computation with tail-recursive repeated squaring.
    @tailrec
    def pow(currExp: Int, maxExp: Int, currTerm: BigInt, prodAccum: BigInt): BigInt = {
      assert(currExp <= maxExp, "The current exponent should never surpass the original one.")
      if (currExp <= maxExp / 2)
        // Next iteration on current term.
        pow(2 * currExp, maxExp, currTerm * currTerm, prodAccum)
      else if (currExp == maxExp)
        // Bottomed out.
        currTerm * prodAccum
      else
        // Compute residual product (rest of terms)
        // using the same method.
        pow(1, maxExp - currExp, base, currTerm * prodAccum)
    }

    if (base == 0 && exp == 0) throw new IllegalArgumentException("0^0 is an undefined form.")
    else if (base == 0 && exp > 0) 0
    else if (base > 0 && exp == 0) 1
    else if (exp == 1) base
    else if (base == 1) 1
    else pow(1, exp, base, 1) // Call to tailrec pow/4
  }

  if (n == 1) this
  else if (n == 0) ONE
  // Calls to (non-tailrec) pow/2
  else if (n < 0) new Rational(pow(denom, -n), pow(numer, -n))
  else new Rational(pow(numer, n), pow(denom, n))
}

}

The innermost 4-arg method pow is of interest here. Raising the Rational 3/2 to the 19th power, new Rational(3, 2) ^ 19, ends up translated to the calls pow(1, 19, 3, 1) for the numerator 3^19 and pow(1, 19, 2, 1) for the denominator 2^19 in the line else new Rational(pow(numer, n), pow(denom, n)). Observe that 3^19 = 3^16 * 3^2 * 3^1. Through the machinery of repeated squaring, the first if condition in pow/4 iterates (literally) over all the possible values of the current exponent that wouldn’t “surpass” the maximum exponent if squared. Through 4 = log_2(16) iterations, it will calculate the value of 3^16 and then, using the recursive call pow(1, maxExp - currExp, base, currTerm * prodAccum), it restarts on the remaining exponent 19 - 16 = 3 while carrying the intermediate result along in the product currTerm * prodAccum.
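For comparison, here is the textbook binary (square-and-multiply) formulation of repeated squaring as a standalone sketch. It is not the pow/4 method above: the names RepeatedSquaring, power and loop are hypothetical, and unlike the article's version it returns 1 for 0^0 instead of throwing.

```scala
import scala.annotation.tailrec

object RepeatedSquaring {
  // Computes base^exp in O(log exp) multiplications.
  def power(base: BigInt, exp: Int): BigInt = {
    require(exp >= 0, "exponent must be non-negative")

    // Invariant: acc * b^e equals base^exp at every step.
    @tailrec
    def loop(b: BigInt, e: Int, acc: BigInt): BigInt =
      if (e == 0) acc
      else if (e % 2 == 1) loop(b * b, e / 2, acc * b) // low bit set: fold b into acc
      else loop(b * b, e / 2, acc)                     // low bit clear: just square

    loop(base, exp, BigInt(1))
  }
}
```

For the exponent 19 (binary 10011) the loop folds in b at the bits worth 1, 2 and 16, which is the same decomposition 3^19 = 3^16 * 3^2 * 3^1 used in the text.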

Stress testing

So, all this is cool and all, but how does this class fare in practice? We want to make two measurements:

(1) How well does our Rational type perform in terms of accuracy and time? To measure this, we run two map-reduce tasks on a large chain of rational numbers under both the Double and Rational representations, and compare results. We generally expect that we will be winning in accuracy but losing in time, since we have to spend significant time on calls to the Rational constructor.

(2) How well does our exponentiation method scale when compared to scala.math.pow, in terms of speed?

To measure time we used the following method presented here:

def time[R](block: => R, msg: String): R = {
  val t0 = System.nanoTime()
  val result = block // call-by-name
  val t1 = System.nanoTime()
  println("Elapsed time for " + msg + " was " + (t1 - t0) + "ns")
  result
}

And use the following script for the first experiment:

import scala.util.Random

println("======= EXPERIMENT 1: ACCURACY AND EFFICIENCY OF STANDARD MAP-REDUCE ========== ")

final val rng = new Random(47)
final val MAX_ITER = 1000 // Vary
final val MAX_INT = 100   // Vary

val intTupleSeq: Seq[(Int, Int)] =
  for (_ <- 1 to MAX_ITER) yield (rng.nextInt(MAX_INT) + 1, rng.nextInt(MAX_INT) + 1)
val quotientSeq: Seq[Double] = intTupleSeq map { case (a, b) => a / b.toDouble }
val rationalSeq: Seq[Rational] = intTupleSeq map { case (a, b) => new Rational(a, b) }

// Sums first....
val quotientSum = time(quotientSeq.sum, "quotient sum")
val rationalSum = time(rationalSeq.reduce((x, y) => x + y), "Rational sum")
println("Value of quotient sum:" + quotientSum)
val evaluatedRationalSum = rationalSum.eval
println("Value of Rational sum:" + evaluatedRationalSum)
println("Error: " + Math.abs(quotientSum - evaluatedRationalSum))

// Products second...
val quotientProd = time(quotientSeq.product, "quotient product")
val rationalProd = time(rationalSeq.reduce((x, y) => x * y), "Rational product")
println("Value of quotient product:" + quotientProd)
val evaluatedRationalProd = rationalProd.eval
println("Value of Rational product:" + evaluatedRationalProd)
println("Error: " + Math.abs(quotientProd - evaluatedRationalProd))

Keep in mind that the bigger the error, the better the case for our Rational type, since up to the point of the call to eval it has admitted zero representational degradation.

Task 1: Simple Map - Reduce

We run two simple Map-Reduce tasks: sum and product over a list of 1000 fractions whose numerators and denominators are random, strictly positive Ints. We vary the largest possible Int and generate time and error metrics for each.

Sum times:

We see that generating the chain of sums for the Rational type takes about seven times longer than generating the simple primitive Double sum. This is to be expected, since there are costs associated with claiming memory from the heap and performing several assignments. We should also remember that the Rational constructor performs the GCD reduction aggressively during construction, and the runtime of that algorithm is affected by the magnitude of the bigger of the two integers for which it is called.

What about accuracy?

Remember: the greater the error, the better for our Rational type. It seems as if we have to go all the way to the 12th decimal digit to find any appreciable difference.

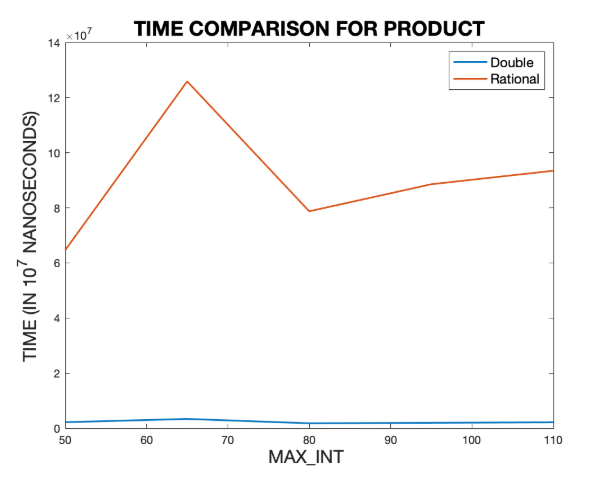
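To get a feel for where such tiny errors come from, here is a deliberately small sketch that is independent of the Rational class. The names DriftDemo and addExact are hypothetical, and the exact fractions are modelled as plain (numerator, denominator) pairs of BigInt.

```scala
object DriftDemo {
  // Exact addition of n1/d1 + n2/d2, reduced by the gcd after every step.
  def addExact(p: (BigInt, BigInt), q: (BigInt, BigInt)): (BigInt, BigInt) = {
    val n = p._1 * q._2 + q._1 * p._2
    val d = p._2 * q._2
    val g = n.gcd(d)
    (n / g, d / g)
  }

  // Ten times one tenth, accumulated in Double arithmetic.
  val doubleSum: Double = Seq.fill(10)(0.1).sum

  // The same sum carried out on exact fractions.
  val exactSum: (BigInt, BigInt) =
    Seq.fill(10)((BigInt(1), BigInt(10))).reduce(addExact)

  def main(args: Array[String]): Unit = {
    println(doubleSum == 1.0) // false: 0.1 has no exact binary representation
    println(exactSum)         // the exact sum, as a (numerator, denominator) pair
  }
}
```

Each Double addition rounds to the nearest representable value, so the error is on the order of the last few bits of a 53-bit significand, which is consistent with differences that only show up around the 12th to 16th decimal digit.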

When it comes to the product task:

Now this is somewhat surprising, since it seems that the Rational type’s difference in execution speed is even greater than the case of sum. Once again, here is the source for +(Rational, Rational) and *(Rational, Rational).

def + (that: Rational): Rational = {
  require(that != null, "Rational + Rational: Provided null argument.")
  new Rational(this.numer * that.denom + that.numer * this.denom,
               this.denom * that.denom)
}

def * (that: Rational): Rational = {
  require(that != null, "Rational * Rational: Provided null argument")
  new Rational(this.numer * that.numer, this.denom * that.denom)
}

This code implements the formulae $\frac{a}{b} + \frac{c}{d} = \frac{ad + cb}{bd}$ and $\frac{a}{b} \cdot \frac{c}{d} = \frac{ac}{bd}$. We see that in the constructor call of + we pay 4 operations (3 multiplications and 1 addition), whereas in the constructor call of * we pay only 2 multiplications. So it’s definitely not the number of operations, but the cost of those operations, particularly when BigInt instances are involved. And, in a product formulation, BigInts can get really big.

What about product error?

Smaller benefits than for the sum (note the different scale, from $10^{-12}$ to $10^{-16}$), to the point of not even being appreciable for small values! No idea why this happens. In all reality, I should have averaged all of those graphs over a number of calls to generate higher fidelity points.

One thing to consider as well is that in order to even produce error graphs, we need a way to evaluate the Rational instance analytically:

def eval: Double = numer.doubleValue() / denom.doubleValue()
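One caveat with converting each part separately: a BigInt beyond the Double range (roughly 1.8e308) converts to Infinity, and Infinity / Infinity is NaN. The sketch below contrasts that with dividing in BigDecimal first; SafeEval, naive and viaBigDecimal are hypothetical names, and this is an assumed alternative rather than anything taken from the article's code.

```scala
object SafeEval {
  // Same approach as eval: convert each part to Double, then divide.
  def naive(numer: BigInt, denom: BigInt): Double =
    numer.toDouble / denom.toDouble

  // Hypothetical alternative: divide in BigDecimal, convert once at the end.
  def viaBigDecimal(numer: BigInt, denom: BigInt): Double =
    (BigDecimal(numer) / BigDecimal(denom)).toDouble

  def main(args: Array[String]): Unit = {
    val n = BigInt(10).pow(400)             // far beyond Double.MaxValue
    val d = BigInt(10).pow(399) * BigInt(4) // so is this
    println(naive(n, d).isNaN)              // both parts overflow to Infinity
    println(viaBigDecimal(n, d))            // the quotient itself is small
  }
}
```

Whether this matters for the experiments depends on how large the reduced numerators and denominators actually get, which the measurements here do not report.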

Task 2: Repeated squaring

Just for fun, I elected to run an experiment to see how our overloading compares to scala.math.pow. Since $a^n$ can be computed in about $\log_2 n$ steps using repeated squaring, we vary the exponent $n$ and keep the base at $\frac{3}{2}$ (1.5 in the Double representation). The computations are of course accurate (Error = 0). We are exclusively interested in time.

Results:

println("=========== EXPERIMENT 2: EFFICIENCY OF EXPONENTIATION ========= ")

final val EXPONENT = 500

var quotientPower: Double = 0.0
var rationalPower: Rational = _

time(quotientPower = scala.math.pow(1.5, EXPONENT), "Double raised to the power of " + EXPONENT)
time(rationalPower = new Rational(3, 2) ^ EXPONENT, "Rational raised to the power of " + EXPONENT)

println("Value of power from Doubles: " + quotientPower + ".")
println("Value of power from Rationals: " + rationalPower.eval + ".")
println("Error: " + Math.abs(quotientPower - rationalPower.eval))

It is laughable to even assume that a custom implementation would in any way compare to scala.math.pow, but what is interesting is the fact that both implementations appear to scale very well as the exponent is increased. This is to be expected because of the power of the logarithm, which “tempers” even large values of $n$ to very tractable values of magnitude $\lceil \log_2 n \rceil$.

Conclusion

In this post, we saw several advantages of the Scala implementation of a rather simplistic numerical type over the corresponding Java implementation. The big advantages have to do not with the runtime, but with the source. In Scala, we are able to overload operators naturally, as in C++, albeit with more intuitive syntax and the usual perks of not having to deal with raw pointers or memory cleanup (if ever required in the overloading of some operator…). We can also use the power of built-in lazy vals in order to do cool things such as allow for types to define their own exceptional instances and deal with them in any way they please (even dynamically). We also compared two different ways of overloading methods in Scala, and concluded that it is far better to emulate the Java-like way of several methods with the same name, instead of pattern-matching on an argument of type Any, as is the more “natural” way in Scala. Finally, we saw a simple application of the language’s syntax and its emphasis on tail recursion and inner methods with our repeated squaring method.

object Runner extends App {
  private def fraction(a: Int, b: Int) = {
    require(b != 0, "Cannot create a fraction with denominator zero.")
    new Rational(a, b)
    // a.toDouble / b.toDouble
  }

  val a = fraction(5, 6)
  val b = fraction(2, 3)
  val c = fraction(1, 2)
  val d = fraction(10, 11)
  val e = fraction(25, 15) // Rational will reduce to 5/3
  val f = fraction(23, 13)
  val g = fraction(230, 17)

  println(a * b + c * d - e * (f + g))
}

The output of the above code as given is: -535765/21879, whereas, if we uncomment the final line of fraction(Int, Int), we have -24.487636546460074, the evaluated fraction. And the only thing we need to change is the inclusion or exclusion of the character string //.

All that said, I would definitely not implement numerical software on top of the JVM. C / C++ / Native Assembly is the only way to go if we want to go that low-level. Furthermore, it is not clear to me, a person who is not professionally involved with commercial-grade numerical software, how a 12th decimal digit difference in accuracy can in any way be significant. Perhaps NASA and SpaceX care about such small differences, to make sure that autonomous vehicles land on Mars and not Venus. Perhaps not. Perhaps the advantage of being able to move to smaller bit widths (Arduino, IoT devices) would justify the use of a “pure” numerical type such as our Rational. The matter of symbolic computation is huge, interesting, important, and with this blog post we are not even making a ripple on the surface.